Fixing Lag: Module Lag - Why Not All Bugfixes Are A Good Idea

As we're all too painfully aware, when a few hundred pod pilots get together and exchange ammunition, the dreaded "lag monster" often comes around. There are a number of distinct phenomena that are referred to as lag. It is important to distinguish between them, as they typically have different causes/solutions (even if the symptoms seem the same, like "my guns didn't work"). This blog is about the type called "module lag," in which modules start to respond very poorly - sometimes for minutes late. Specifically, this blog is about the bug that causes this for repeats/deactivations, and why it's not fixed on Tranquility (TQ) yet.

At the Council of Stellar Management (CSM) summit in June, the CSM made an excellent presentation of the issues around fleet-fight lag, specifically about the issues of modules becoming ‘stuck' and the workarounds used by players. This provided useful insight into a problem which, until recently, we'd never been able to reproduce in a development environment. This made the problem very tangible, and gave us a great symptom to begin digging into.

Like all the best bugs, this one has layers. We'll start at the first thing we noticed when digging at it: the system responsible for telling the server when modules should be turned off or repeated would get minutes behind in processing when fleet fights happen while other systems remain reasonably responsive. This system, named Dogma, handles module activation/repeat/deactivation, as well as the actual effects of those modules. It also handles the various skill/ship modifiers you see in the game.

This sort of lopsided performance should be easily tweaked. Tasks on the EVE servers use a time-sharing technique called cooperative multitasking which, in short, means that a task has to willingly yield execution to other tasks, otherwise it will run forever. In this case, it would seem the part of Dogma handling module repeat and deactivation was being too nice - yielding execution too much.

Looking at the code some, a theory emerged as to why. There was an error case that stuck out as odd - if an effect was supposed to be stopped or repeated, but the effect system itself didn't agree that it was time yet, the code would throw up its hands and give up. If that error case gets hit, the processing loop would yield to other systems early. A code comment was very reassuring though - this error was supposedly "rare."

The "rare" error happened 1.5 million times in the month of June, 2010 on TQ.

Above: A re-enactment of my expression after learning this fact

So, there's the first layer. This error happens, Dogma doesn't finish what it wanted to do (process every module event that should happen), the work load backs up and your modules start taking minutes to respond. The typical thing to do here would be to run a test to verify this. Unfortunately at that point the only environment we had to test on was the Singularity server mass tests. So, off we go, putting in a setting to test to see if simply continuing past this error and finishing processing would be enough to alleviate the module repeat/deactivation problem. There was a solid chance that it would.

Participants of the July 15th mass test: this is what I tested that made the node go horribly, horribly wrong. Instead of clearing through the errors and charging on to victory, the effects processing loop instead choked on them, constantly hitting them and not yielding execution to anyone else until minutes later. Interesting result. The next mass test was three weeks away, so back to the lab I went to cook up something to test then.

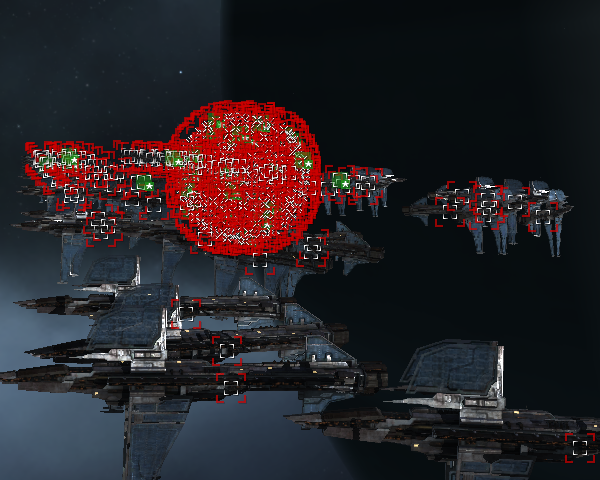

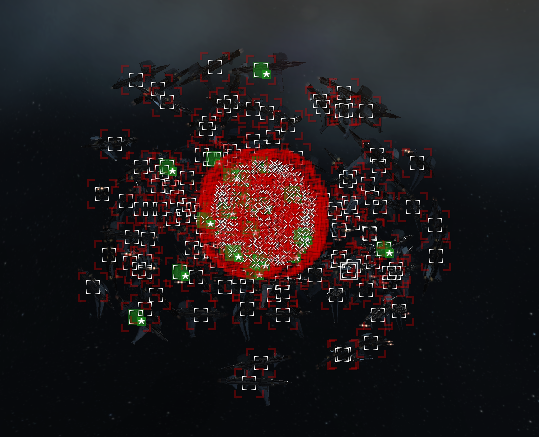

And then, our savior arrived. The Thin Client, as outlined in CCP Atropos' blog. These lovely little beasties, once properly tamed, allowed us to get a few hundred clients doing simple behaviors in the lab. First, there were lazors:

![]()

Then there were drones:

Then there was orbiting:

And then there was lag! The server I was running against was positively unhappy. But still, modules were reasonably fine - the error was not to be seen. (Remember what I said above about there being many manifestations of lag? Well this was a different sort, but not what we needed to reproduce the ‘stuck module' symptom) Some fantastic sleuthing by my teammate CCP Masterplan lead us to some simple steps that could be done to induce this error. Module delays quickly followed.

Once we have a reproduction in the lab that we can poke at, test new code on, and generally just play with, only the craziest bugs stand a chance. This one took only a couple hours to pin down once this setup was in place.

So layer two! The error, as you may recall from a few paragraphs up, was that Dogma was saying that the effect wasn't ready to be stopped or repeated yet. Well, why? The calculation is as easy as you'd think it would be - start time plus duration. It would take a lot to miscalculate that, so what the hell?

Turns out, the errors were coming about because the same effect was in the stop/repeat manager queue twice (or more). One of the copies would process fine, and then all of the duplicates would generate the error because the effect was already repeated by the first one that processed fine. So, duplicates in the manager caused the error, the error caused the process loop to yield early, the process loop yielding early caused module problems.

Next up, we put together a test to see if enforcing that there be no duplicates in the manager queue fixes the error, and therefore the module problem. Cue the thin clients, they happily pew pew themselves for a few hours while we get the code right, and the result is a positive - fixing this layer does fix the symptom.

But good engineers don't just fix a problem once, you fix it at as many layers as you can. At this point, we've prevented duplicate effects from entering, but they shouldn't be trying to get in there in the first place. A quick trace dropped in where the duplicate is requested pointed us right at the top layer of this bug. The code that's responsible for making an entity (a drone, an NPC, things like that) go docile was calling the very same function that the stop/repeat manager was calling to stop its effects! So specific timing cases, which are rare under normal circumstances but extremely common in high-load situations, were causing the effect to repeat instead of stop. One quick tweak there and the top layer is fixed as well.

Thin client tests confirmed that fixing either layer fixes the symptom. Cue the mass test!

The August 5th mass test had three rounds of combat in order to help us identify the impact of these bug fixes. The first round had the fixes turned off, and the second with them turned on, no difference in style of fighting. The fixes did exactly what they intended to do - repeating/disabling effects went from being 155 seconds behind at the height of round one to never getting more than seven seconds behind. Success!

Well, almost. As anyone who was at that test would tell you, the game was in bad, bad shape. If you could get a lock and get a module to start repeating, it would repeat with gusto, but it rarely actually happened. Locking took minutes, same with turning on or off a module. Even warping away took a very long time.

There's two problems evident from that test. Firstly it's clear that while relying on a bug to yield execution from this loop is a bad idea, not yielding at all isn't a good plan either. Secondly, and more importantly, reasonable numbers of pilots can generate enough load for the effects system that it can't keep up. That's a dangerous game, taking more than one second to process one second worth of effects - it quickly leads to doing nothing but process effects. That's what we started to see happen in the second phase of combat, which is scary business.

In the third phase we wanted to see where the tipping point was, so we started off with a decreased load by requesting no drones. Everything was peachy, the system could keep up just fine. Adding drones in doesn't add very much load, but it was enough to tip the effects system over the edge of being able to keep up. We were able to find where that tipping point was, and that will help guide what we do for TQ in the short term.

Essentially what we have to do is re-introduce yielding in the effect processing queue, only under our control instead of at the whim of some defect. It will be after a configurable amount of time, and I'll be present at some big fights to try to tune in on where that value should be to balance the needs of processing effects versus every other system. Longer term, we're working on some designs that could allow the effects system to keep up under very high load, which should finally put the nail in the coffin on module response issues.

In the meantime, we've been pointing the thin clients at various hotspots of performance and tackling them. More on that as soon as the results are out for you to enjoy!

~CCP Veritas

PS - Big shout out to the CSM for their help to date pin pointing specific issues like this one and notifying us of fights as they happen so we can be there monitoring. Also, thanks to all the players turning out for the Singularity mass-tests. Much love~

Till then, toodles.